In every business, from the boardroom to the factory floor, conversations are dominated by questions about how generative AI will change the way we work. Contracting professionals are weighing in early and often.[1] According to Gartner’s 2023 Hype Cycle for Emerging Technologies, “inflated expectations” for generative AI are at their peak right now, and you’ve probably felt those puffed-up hopes (or fears?) yourself,[2] like these:

“Generative AI will write all our contracts for us.”

“Generative AI will handle all negotiations.”

“Generative AI will take our jobs.”

“Generative AI will save our jobs.”

But in reality, we are still very early in the game for applying generative AI to business processes, including contracting. A recent World Commerce & Contracting survey found that only 21% of respondents have “fully integrated AI into their processes”; 61% of respondents aren’t using AI at all.

“It’s evident,” the WorldCC’s Sally Guyer observed, “that AI’s footprint in contracting is in its infancy.”

That’s not an anomaly for contracting. Gartner expects generative AI to deliver “transformational benefit” in two to five years. Between now and then, we can expect to endure a “trough of disillusionment” where organizations experiment with applying generative AI to processes and hit the roadblocks common to any new technology.

“As the technologies in this Hype Cycle are still at an early stage, there is significant uncertainty about how they will evolve,” Melissa Davis, VP Analyst at Gartner, said about generative AI and other emerging tech. “Such embryonic technologies present greater risks for deployment, but potentially greater benefits for early adopters.”

The uncertainty is real. In conversations with business leaders about applying generative AI to their processes, it is clear that there are more questions than answers.

That said, some clear early learnings are emerging that can help ground conversations about how to approach deploying generative AI[3] to improve contract outcomes when a company is ready.

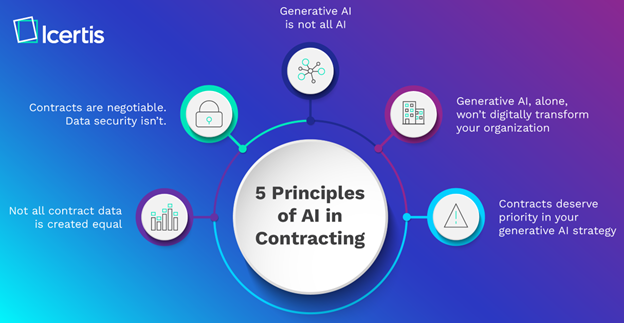

Five learnings that stand out the most are these.

1. Not all contract data is created equal

To greatly oversimplify matters, large language models work by applying language patterns to “predict” what word will come next in a sentence. Having been trained on vast amounts of public internet data, large language models like those behind ChatGPT are incredibly versatile in their ability to mimic different speech patterns and summarize information.
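To make the “predict the next word” idea concrete, here is a deliberately tiny sketch. It is not how production large language models are built (they use neural networks trained on vastly more text); it only counts which word most often follows another in a made-up, hypothetical snippet of contract language.

```python
from collections import Counter, defaultdict


def train_bigrams(text):
    """Count, for every word, which words follow it in the training text."""
    words = text.lower().split()
    following = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        following[current][nxt] += 1
    return following


def predict_next(following, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    counts = following.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]


# Hypothetical training text, invented for illustration only.
corpus = (
    "the supplier shall deliver the goods "
    "the supplier shall invoice the buyer "
    "the buyer shall pay the supplier"
)

model = train_bigrams(corpus)
print(predict_next(model, "supplier"))   # most common word after "supplier"
print(predict_next(model, "indemnify"))  # never seen in the corpus
```

Note what happens with a word the toy model has never seen: it has nothing to offer. That limitation is the small-scale version of the drawback discussed next.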

Yet querying “the world’s data” alone comes with drawbacks: the information a model returns may not relate to your specific context and, by definition, cannot reflect internal corporate documents or other internal knowledge bases.

In a recent Harvard Business Review article,[4] Tom Davenport and Maryam Alavi highlighted this as a major challenge, and opportunity, for organizations looking to get the most out of generative AI:

“Many companies are experimenting with ChatGPT and other large language or image models. They have generally found them to be astounding in terms of their ability to express complex ideas in articulate language. However, most users realize that these systems are primarily trained on internet-based information and can’t respond to prompts or questions regarding proprietary content or knowledge. Leveraging a company’s proprietary knowledge is critical to its ability to compete and innovate, especially in today’s volatile environment.”[4]

This is particularly true for contracts. While some material corporate agreements are publicly disclosed and thus exposed to large language models, chances are that the bulk of your organization’s contracts are closely guarded secrets—that is, not available on the open web for consumer-grade generative AI models to reference.

Several approaches are available for solving this challenge. The first is for companies to build their own large language models, which is not a practical solution for most organizations. Another is to start with publicly available large language models and then “tune” them with proprietary datasets, though this is also a heavy lift.

The most practical approach is to use large language models as is, expose those models to your proprietary data, then engineer prompts that pull from multiple data sets to generate the desired results. Note that you may not need a crack prompt engineer on staff to take advantage of this approach. Many generative AI offerings come pre-packaged with pre-set prompts that do the engineering for you. All you need to do is provide the relevant data (for example, your proprietary contract portfolio) in a secure setting (more on the security factor in a moment).

Whatever path you take, as you start your conversations about generative AI, consider what data pools you plan to leverage and what results they will return. This will help ensure that once generative AI is live in your organization, it is generating the competitive advantage you are looking for.
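As a rough illustration of “exposing a model to your data” through prompts, the sketch below picks the contract clause most relevant to a question and folds it into a prompt. Everything here is an assumption made for illustration: the clause IDs and text are invented, the keyword-overlap scoring is a stand-in for the far more capable search that commercial tools use, and no actual model is called.

```python
import re


def tokenize(text):
    """Lowercase the text and return its set of alphabetic words."""
    return set(re.findall(r"[a-z]+", text.lower()))


def retrieve(question, clauses, top_k=2):
    """Rank clauses by how many words they share with the question."""
    q_words = tokenize(question)
    ranked = sorted(
        clauses,
        key=lambda c: len(q_words & tokenize(c["text"])),
        reverse=True,
    )
    return ranked[:top_k]


def build_prompt(question, clauses):
    """Assemble a prompt that grounds the question in the selected clauses."""
    context = "\n".join(f"[{c['id']}] {c['text']}" for c in clauses)
    return (
        "Answer using only the contract excerpts below.\n\n"
        f"Excerpts:\n{context}\n\n"
        f"Question: {question}"
    )


# Hypothetical clauses standing in for a proprietary contract portfolio.
CLAUSES = [
    {"id": "MSA-7.2", "text": "Either party may terminate this agreement with 60 days written notice."},
    {"id": "MSA-9.1", "text": "Supplier shall maintain insurance coverage of at least 2 million dollars."},
    {"id": "SOW-3.4", "text": "Payment is due within 45 days of the invoice date."},
]

question = "How many days notice is required to terminate the agreement?"
top = retrieve(question, CLAUSES, top_k=1)
prompt = build_prompt(question, top)
print(prompt)
```

The resulting prompt is what would be sent to a language model in a secure environment. The model itself is unchanged; only the prompt carries your proprietary context, which is why this route is lighter than building or tuning a model.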

2. Contracts are negotiable. Data security isn’t.

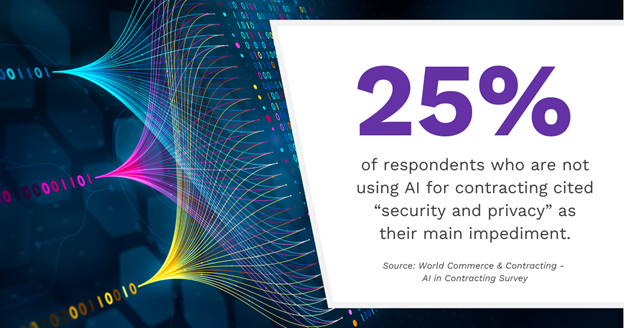

According to the recent WorldCC AI survey, data security represents the biggest barrier to adopting generative AI. Twenty-five percent (25%) of respondents who are not using AI for contracting cited “security and privacy” as their main impediment. Seventeen percent (17%) of respondents said their organizations have banned generative AI tools altogether.

Although serious doubts exist about the wisdom of outright bans of generative AI, the caution exercised by these IT departments is understandable.

Given the sensitivity of contract data, contracting professionals must show extra caution regardless of their organization’s broader posture. Many consumer-grade large language models, including ChatGPT, by default use the information users send them for further training. GenAI advocates claim that this information is deconstructed in a way that renders it all but meaningless, but many IT departments remain unconvinced and, as a number of business headlines attest, with good reason. (See: “Whoops, Samsung workers accidentally leaked trade secrets via ChatGPT”).[5]

Given that we are still in the early days of generative AI, there is no reason to play fast and loose with contract data security via consumer-grade solutions. Take the time to understand what the risks are, and what solutions exist that can provide the safety your organization needs to confidently start applying generative AI to your most sensitive contract data.

3. Generative AI is not all AI

“AI” has been a buzzword since long before ChatGPT took the world by storm. While it might be tempting to see generative AI as supplanting all AI technology that came before it, the reality is a little more nuanced—and understanding that nuance can help organizations build more robust AI solutions, because it allows companies to leverage technology with a much longer track record of proven results.

Tech writer Bernard Marr described the distinction between “traditional AI” and generative AI this way:

“Traditional AI excels at pattern recognition, while generative AI excels at pattern creation.” Marr goes on to note that these two capabilities are not “mutually exclusive”: “Generative AI could work in tandem with traditional AI to provide even more powerful solutions. For instance, a traditional AI could analyze user behavior data, and a generative AI could use this analysis to create personalized content.”[6]

This is an important point for contract professionals to remember. AI trained to recognize patterns in contracts (for example, to understand what an obligation looks like for easier data extraction) has been commercially available for more than five years and will continue to serve a role even as generative AI sees wider adoption.

If your organization has not adopted any AI for contracting, take a holistic view of what is available—the “traditional” AI may provide opportunities for value that don’t come with the risk of adopting less proven solutions. Alternatively, it could augment the incredible power that generative AI offers.

4. Generative AI, alone, won’t digitally transform your organization

Impressive as it is, generative AI is just another technology, and deploying it will follow the same immutable law that governs all technology implementations: if no one uses it, it won’t deliver value. Alternatively, if users aren’t trained on how to best leverage the technology, they may use it but get little value out of it (call this the law of “junk in, junk out”).

Stories of failed digital transformations are legion, with McKinsey estimating that 69 percent of all digital transformation projects fail. Ample research shows that these projects fail not because the technology doesn’t work, but because users are not enabled to incorporate the new technology into their workday. In fact, they may spend a considerable amount of time and effort to get around the software – hardly the efficiency gain you’d hope for from your digital spend.

Wherever your organization is in digital maturity, it will pay to take an incremental approach[7] to introducing generative AI into processes. Onboard your new AI partner like you would any new member of a team: start it out with small, isolated projects that provide value but don’t necessarily carry enterprise implications.

This will provide your organization with early learnings about what generative AI does well. It can also deliver small wins that gain trust and buy-in from stakeholders. This is critical: while the number of AI skeptics in the contracting world appears small, we all know that it only takes a few curmudgeons to bog down a digital transformation project.

Contract teams also will need robust enablement plans in place. This should include training on emerging fields like prompt engineering that will ensure the workforce sees generative AI as an augmenting force – and not a risk to their skillset. Technology companies and service providers with track records in artificial intelligence/machine learning (AI/ML) can also be a resource for empowering users.

Speaking to WorldCC recently, a general counsel noted: “If you haven’t adopted a digital culture, AI won’t help you.”

So how will you create your digital culture?

5. Contracts deserve priority in your generative AI strategy

As stated above, every corner of a business is looking at generative AI and how it can make a difference. This could create a traffic jam when it comes to asking for digital spend to buy solutions. This means there is a serious question we must ask and answer: where should organizations focus their energy first to have the biggest impact?

No doubt you agree that contracts deserve priority, but how will you prove this to your stakeholders, whether they represent your finance department, your IT department, or your department head?

A good place to start is with the enterprise-wide scope of contracts. By starting with digitized contracts, organizations address the foundation of their relationships with suppliers, customers, employees, and partners. In other words, AI transformation of contracts will act as a catalyst and accelerator for AI transformation of other systems and processes. Conversely, if contracts are left out, efforts to transform processes that rely on contracts (procure-to-pay, quote-to-cash, hire-to-retire, etc.) will falter.

Get optimistic! Why not?

There is still so much we don’t know about where generative AI will take us. But there is ample reason to be optimistic that it will ultimately be a boon to the world of contracting and the commerce it supports.

Contracting leaders share in the optimism for the future

We have moved past the early trepidation about AI replacing human workers. A recent World Commerce & Contracting survey of contracting professionals found that 35 percent are optimistic about how AI will impact the contracting world, versus just 2 percent who are pessimistic. Analysts at IDC came to a similar conclusion in a recent report: “Despite the headlines focusing on the replacement of lawyers, most respondents indicated that they expected AI to have more of an enhanced co-worker role. Essentially, functioning as an insource labor force for menial tasks.”[3]

Hype? Perhaps – but that beats disillusionment any day.

END NOTES

ABOUT THE AUTHOR

Drawing from her experiences as an assistant general counsel, law firm partner and legaltech entrepreneur, Bernadette Bulacan is the Chief Evangelist for Icertis, the contract intelligence company pushing the boundaries of what’s possible with CLM. At Icertis, she is charged with identifying trends and innovations affecting corporate legal departments and strengthening the bonds between Icertis and the corporate counsel community. Prior to Icertis, Bernadette held market development leadership roles at Thomson Reuters, in its legal professionals division, and QuantAI, an intellectual property analytics AI company. She was a founding employee and Assistant General Counsel of Serengeti Legal Tracker, which was acquired by Thomson Reuters.

ABOUT ICERTIS

With unmatched technology and category-defining innovation, Icertis pushes the boundaries of what’s possible with contract lifecycle management (CLM). The AI-powered, analyst-validated Icertis Contract Intelligence (ICI) platform turns contracts from static documents into strategic advantage by structuring and connecting the critical contract information that defines how an organization runs. Today, the world’s most iconic brands and disruptive innovators trust Icertis to govern the rights and commitments in their 10 million+ contracts worth more than $1 trillion, in 40+ languages and 90+ countries.
