Our article evaluates current issues surrounding the upcoming European Union (EU) regulation known as the Artificial Intelligence Act (AI Act).1 Proposed by the European Commission on 21 April 2021, the AI Act seeks mainly to classify and regulate artificial intelligence applications according to their risk of causing harm. The classification falls into four categories: banned practices, high-risk systems, limited-risk systems, and systems with minimal or no risk.2

The effort to regulate the use of AI solutions has been received positively, especially after the generative AI revolution sparked by tools such as ChatGPT (Chat Generative Pre-trained Transformer), an artificial intelligence chatbot developed by OpenAI and launched on November 30, 2022.

But we have concerns about the fallout of this revolutionary regulation. What will it really do? Specifically, we ask…

- Will provisions be clear and precise, considering penalties for non-compliance?

- Will we find a clear balance between secure and unrestricted use of AI systems?

- And what about Google's decision to limit the availability of its new AI tool, Google Bard,3 a competitor to ChatGPT? Although Bard was released on the European market on 13 July 2023,4 Google’s move to temporarily delay the launch, seemingly because of regulatory concerns, may still be the first worrisome signal from global technology leaders that overly restrictive regulation could limit the development of innovation in Europe.

Although Europe lags behind the United States and China in developing artificial intelligence (AI), it is well ahead in legislating for its safe use, based on European values and standards. As with the GDPR, the EU could now become a global leader in regulating the use of AI. However, the EU faces the real challenge of striking the right balance between maintaining high standards of privacy protection and security for users of AI systems and creating realistic conditions for innovation.

So what lies ahead? Only time will tell!

Work on the regulation that will soon govern the use of artificial intelligence tools in Europe has been underway in the European Union since 2021, when the European Commission presented the preliminary draft of the Artificial Intelligence Act (AI Act). In mid-June 2023, the European Parliament adopted its position on the AI Act, and the regulation will now progress to the final ‘trilogue’ stage of the EU regulatory process. This will involve negotiations on the final version of the regulation with the Council of the EU and the Commission, which may conclude as early as this year (2023).

The goal of legislative efforts has been clear from the beginning: the use of solutions based on broadly defined artificial intelligence should be safe and respectful of fundamental rights, which form the backbone of European values. The focus on human-centric and ethically-driven AI development in the European market is intended to go hand-in-hand with ensuring freedom of operation and support for businesses seeking the advancement of new technologies.

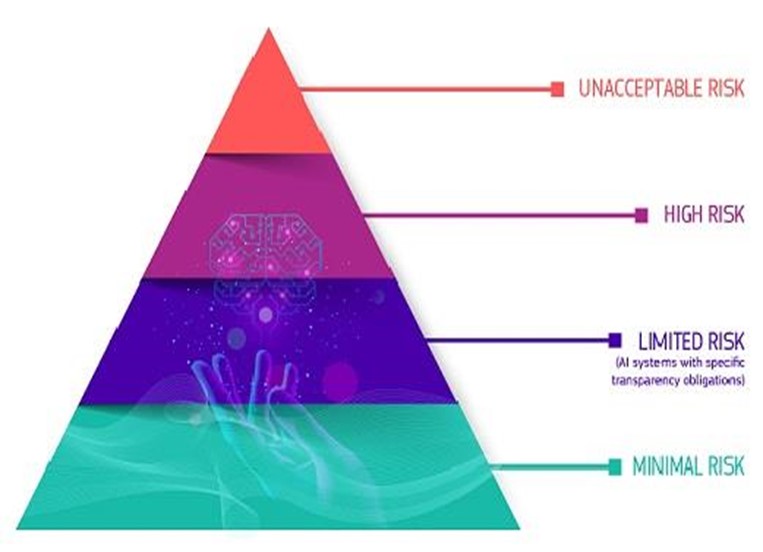

The draft regulation, which remains the subject of intense debate, proposes sorting artificial intelligence systems into four categories based on the level of risk they may pose. These categories are unacceptable systems, which would be completely prohibited; high-risk systems, which would be subject to specific requirements before being allowed on the market; limited-risk systems; and systems with minimal or no risk. Together, these four levels of risk make up the regulatory framework:5

- Unacceptable risk

- High risk

- Limited risk

- Minimal or no risk

Which systems will be completely prohibited?

First, systems that are contrary to the fundamental values of the EU and may violate fundamental rights will be prohibited by the AI Act. Two examples are the well-known social scoring system from China and the use of subliminal techniques that operate beyond a person's consciousness. Systems that exploit human vulnerabilities such as age, social or economic situation, or physical or mental disability in ways that might distort normal human behavior will also be prohibited. Exceptions could be made, however, for systems designed for approved therapeutic purposes.

Beyond that, real-time remote biometric identification (e.g., facial recognition) in public spaces will be prohibited as a rule, as will ex-post biometric identification, with exceptions for the most serious crimes and only with court approval. Similarly, profiling potential criminals based on their characteristics, prior offenses, or location is deemed unacceptable. AI systems for emotion recognition used in the workplace, in schools, and in crime prevention will likely also be prohibited, as will the extraction of facial images from the internet or CCTV footage to create facial recognition databases.

What does this mean in practice?

From a business perspective, the most significant category will be high-risk systems, which will be allowed but subject to a catalog of limitations and obligations that form the core of the draft regulation.

Certainly, new responsibilities will be mandated for providers who offer artificial intelligence systems for managing critical infrastructure, education and vocational training, employee recruitment, or creditworthiness assessment. Such providers will be obliged, among other things, to:

- establish and maintain a risk management system throughout the entire lifecycle of the AI system;

- introduce techniques that meet the quality criteria for data sets used for training and validating a model according to the latest version of the draft regulation, as far as this is technically feasible; and

- draw up detailed technical documentation describing the AI system and containing the information necessary for assessing the system's compliance with the regulation.

High-risk artificial intelligence systems will also need to be technically capable of automatically recording events (logs) while they are operating. Such systems need to be designed and developed to ensure sufficient transparency in their operations and to allow for effective human oversight.
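To make the logging requirement more concrete, the following is a minimal sketch of automatic event recording, assuming one JSON line per event. The draft regulation does not prescribe this format; the class `EventRecorder`, the function `score_applicant`, the field names, and the placeholder score are all hypothetical and serve only as an illustration.

```python
import io
import json
from datetime import datetime, timezone


class EventRecorder:
    """Appends one JSON line per event to a text stream (hypothetical format)."""

    def __init__(self, stream, system_id):
        self._stream = stream
        self._system_id = system_id

    def record(self, event_type, **details):
        # Every entry carries a UTC timestamp so events can be ordered later.
        entry = {
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "system_id": self._system_id,
            "event_type": event_type,
            "details": details,
        }
        self._stream.write(json.dumps(entry, sort_keys=True) + "\n")
        return entry


def score_applicant(recorder, features):
    """Stand-in for a high-risk system call; logging happens automatically."""
    recorder.record("inference_started", input_fields=sorted(features))
    score = 0.5  # placeholder value, not a real model output
    recorder.record("inference_completed", score=score)
    return score


# Usage: an in-memory stream stands in for a log file.
log_stream = io.StringIO()
recorder = EventRecorder(log_stream, system_id="credit-scoring-demo")
score_applicant(recorder, {"income": 52000, "age": 41})
```

Because the recording happens inside the function that wraps the system call, the operator does not have to remember to log each use. Whether such a log would satisfy the final text of the regulation is an open question.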

Obligations for limited-risk systems will not be as restrictive; the key requirement will be to fulfill informational duties and ensure transparency. For example, users interacting with a virtual advisor (chatbot) will need to be clearly informed that they are interacting with an AI system.

On the other hand, computer game developers and providers of tools such as anti-spam filters can rest easy, because all evidence suggests that such systems will primarily be classified as minimal risk and will remain outside the scope of regulation.
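The four-tier framework described above can be summarized as a simple lookup. The sketch below is only an illustration built from the examples mentioned in this article; the names `RiskTier`, `TREATMENT`, `EXAMPLE_USE_CASES`, and `treatment_for` are hypothetical, and a real classification would depend on the final legal text rather than on a table like this.

```python
from enum import Enum


class RiskTier(Enum):
    UNACCEPTABLE = "unacceptable risk"
    HIGH = "high risk"
    LIMITED = "limited risk"
    MINIMAL = "minimal or no risk"


# How the draft treats each tier, as summarized in this article.
TREATMENT = {
    RiskTier.UNACCEPTABLE: "prohibited",
    RiskTier.HIGH: "allowed, subject to specific requirements",
    RiskTier.LIMITED: "allowed, subject to transparency duties",
    RiskTier.MINIMAL: "outside the scope of the regulation",
}

# Illustrative use cases taken from the article's own examples.
EXAMPLE_USE_CASES = {
    "social scoring": RiskTier.UNACCEPTABLE,
    "employee recruitment": RiskTier.HIGH,
    "creditworthiness assessment": RiskTier.HIGH,
    "customer chatbot": RiskTier.LIMITED,
    "anti-spam filter": RiskTier.MINIMAL,
}


def treatment_for(use_case):
    """Return the (tier, treatment) pair for one of the example use cases."""
    tier = EXAMPLE_USE_CASES[use_case]
    return tier, TREATMENT[tier]


for name in EXAMPLE_USE_CASES:
    tier, treatment = treatment_for(name)
    print(f"{name}: {tier.value} -> {treatment}")
```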

The fact that the EU has decided to address this matter through a regulation that will be directly effective in all member states, in terms of both subject matter and territory, means that we can soon expect a revolution like the one businesses faced with the introduction of the GDPR. The regulation will apply to a range of entities, including providers, importers, distributors, and users of AI systems. Providers placing AI systems on the market or putting them into service in the Union will be subject to the regulation regardless of whether they are established within the EU or in a third country.

Similarly, the regulation would probably apply if the output produced by the AI system is intended to be used in the EU.

As with the GDPR, non-compliance with the regulation may result in significant financial penalties for businesses. For instance, for violating the prohibition on placing prohibited artificial intelligence systems on the market, the latest draft of the regulation proposes fines of up to €40 million (approx. $43.88 million) or 7% of the company's total worldwide annual turnover for the previous financial year, which is significantly higher than the penalties under the GDPR.

Similarly, in the event of non-compliance with other key requirements related to high-risk artificial intelligence systems, such as data governance, the maximum penalty could reach €20 million (approx. $21.94 million) or 4% of the company's total worldwide annual turnover for the previous financial year. Lower penalties may be imposed for other violations, but that does not mean they will be less severe, especially for smaller firms.
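A short calculation shows how the two penalty tiers scale with company size. This sketch assumes that the applicable ceiling is the higher of the fixed amount and the turnover percentage, which is how we read the draft; that reading, and the names `PenaltyTier` and `max_fine_eur`, are assumptions made for illustration only.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PenaltyTier:
    fixed_cap_eur: int      # fixed ceiling in euros
    turnover_percent: int   # percentage of worldwide annual turnover


# Figures taken from the latest draft as quoted in this article.
PROHIBITED_PRACTICE = PenaltyTier(fixed_cap_eur=40_000_000, turnover_percent=7)
HIGH_RISK_REQUIREMENT = PenaltyTier(fixed_cap_eur=20_000_000, turnover_percent=4)


def max_fine_eur(tier, annual_turnover_eur):
    """Upper bound of the fine, assuming 'whichever is higher' applies."""
    turnover_based = annual_turnover_eur * tier.turnover_percent / 100
    return max(tier.fixed_cap_eur, turnover_based)


# A company with EUR 1 billion turnover versus one with EUR 100 million.
for turnover in (1_000_000_000, 100_000_000):
    print(
        f"turnover EUR {turnover:,}: "
        f"prohibited practice up to EUR {max_fine_eur(PROHIBITED_PRACTICE, turnover):,.0f}, "
        f"high-risk requirement up to EUR {max_fine_eur(HIGH_RISK_REQUIREMENT, turnover):,.0f}"
    )
```

Under this assumption, the percentage governs for large companies, while the fixed amount remains the ceiling for smaller ones. The fixed amount can far exceed a small firm's relevant turnover share, which is why even the lower tiers may weigh heavily on smaller firms.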

The emergence of AI tools like ChatGPT or Midjourney unexpectedly upended the table at which EU legislators seemed to be finalizing the shape of the AI Act. It turns out that the use of text or image generators can pose much greater risks than anticipated. Initially, the draft regulation stated that users of an AI system that generates or manipulates image, audio or video content that appreciably resembles existing persons, objects, places or other entities or events are obliged to disclose that the content has been artificially generated or manipulated.

During the legislative process, it became apparent that the transparency and reliability of generated content needed to be ensured, and that the intellectual property rights associated with that content needed protection. At this stage of the process, the aforementioned tools have been classified as generative artificial intelligence. This classification imposes additional obligations on their providers: to design the foundation models with adequate safeguards against the generation of illegal content, and to document and make publicly available a sufficiently detailed summary of the use of training data protected under copyright law.

It is difficult to predict when we will know the final shape of the regulation and when its provisions will start to apply. Currently, a transitional period of two years from the date of its entering into force is expected. However, one thing is certain: just like with the GDPR, we will face an enormous amount of work to adapt our operations to the newly applicable regulations, including the need to avoid the specter of severe financial penalties for non-compliance.

The effort to regulate the use of AI solutions is widely regarded as positive, especially following the revolution sparked by the emergence of Generative AI solutions such as ChatGPT. However, as always, "the devil is in the details," leading to tumultuous discussions about the final shape of the revolutionary regulation to ensure its provisions are clear and precise, particularly considering the potential penalties for non-compliance.

Nonetheless, the greatest challenge remains finding a balance between secure and unrestricted use of AI systems. Google's decision to temporarily limit the availability of its new tool, "Google Bard" (a competitor to the already popular ChatGPT), in Europe, may be the first worrisome signal of global technology leaders bypassing the European market due to overly restrictive legal requirements.

Only time will tell whether this was a one-off decision by Google or an unfortunate side effect of increasing legislative changes and stricter demands in this area, one that may be repeated in the future. It's worth noting that just a few months ago, ChatGPT was initially banned in Italy due to concerns about user privacy and a lack of transparency in data collection and processing. This confirms that regulatory authorities will not show leniency when it comes to ensuring compliance with the GDPR for increasingly advanced AI systems.

Similar attitudes can be expected regarding the AI Act, although the two-year transitional period and companies' experience in implementing the GDPR in recent years may somewhat ease the process of transformation and adaptation to the new requirements. Naturally, restricting the availability of innovative solutions, and thus slowing down technological development because of the AI Act, would be a proverbial case of throwing the baby out with the bathwater. This is the primary concern of business representatives and opponents of excessive regulation of AI and new technologies (legislation always lags behind scientific advancements, particularly in rapidly evolving sectors).

The numerous concerns about the AI Act project require ongoing monitoring. Nevertheless, even now, entrepreneurs utilizing AI-based solutions in their products or organizations should prepare for forthcoming, inevitable changes.

END NOTES

- The Artificial Intelligence Act: What is the EU AI Act?, The AI Act newsletter article; see also An Introduction to the EU AI Act, article by Brad Hammer and Carissa Maher, June 26, 2023; Wikipedia analysis

- EU article Shaping Europe’s Digital Future, Proposal for a Regulation laying down harmonised rules on artificial intelligence, updated 20 June 2023

- Google Bard; see also the article What is Google Bard?

- Google - July Bard update: New features, languages, countries (blog.google)

- EU article Shaping Europe’s Digital Future, Regulatory framework proposal on artificial intelligence, updated 20 June 2023

ABOUT THE AUTHORS

Adriana Jarczyńska

Senior Manager, Contract Management Services at Nexdigm (https://www.linkedin.com/company/nexdigm/). She is an attorney-at-law (LL.M.) with over ten years of professional experience in law firms and international corporations. She is a specialist in personal data protection law (CIPP/E) and new technologies law.

Kinga Łabanowicz

Senior Manager, Contract Management Services at Nexdigm (https://www.linkedin.com/company/nexdigm/). She is an attorney-at-law with past experience as a director of legal departments in international firms, legal manager, and expert in civil, commercial, and new technologies law.

ABOUT NEXDIGM

Nexdigm provides integrated, digitally driven solutions encompassing business consulting, business services, and professional services that help businesses navigate challenges across all stages of their life cycle. This employee-owned, privately held, independent global organization strives to help companies across geographies meet the needs of a dynamic business environment. Through their direct operations in the USA, Poland, United Arab Emirates (UAE), and India, they serve a diverse range of clients, spanning multinationals, listed companies, privately-owned companies, and family-owned businesses from over 50 countries.
